Attention Mechanism In Transformer


Attention Mechanism:

The attention mechanism is designed to focus on different parts of the input data, depending on the context. In the Transformer model, attention lets the model focus on different words in the input sequence when producing an output sequence. The strength of the attention is determined by a score computed from a query and a key; that score decides how much the corresponding value contributes to the output.

1. The Basic Attention Equation:

Given a Query ($Q$), a Key ($K$), and a Value ($V$), the attention mechanism computes a weighted sum of the values, where the weight assigned to each value is determined by the query and the corresponding key.

The attention score for a query and a key is calculated as their dot product:

$$\text{Score} = QK^T$$

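This score is then scaled and passed through a softmax function to get the attention weights:

$$\text{Attention Weights} = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)$$

where $d_k$ is the dimension of the key vectors. Dividing by $\sqrt{d_k}$ keeps the dot products from growing with the vector dimension; without it, large scores would push the softmax into regions where its gradients are very small, so the scaling helps stabilize the gradients.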

Finally, the output is calculated as a weighted sum of the values:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right) V$$

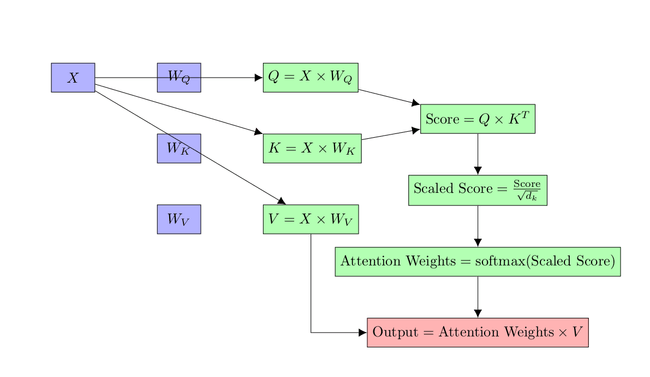
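To make the score, softmax, and weighted-sum steps concrete, here is a minimal pure-Python sketch of scaled dot-product attention for a single query vector. The function name `scaled_dot_product_attention` and the toy vectors are hypothetical, chosen for illustration; plain lists are used so the arithmetic stays visible.

```python
import math


def scaled_dot_product_attention(query, keys, values):
    """Attention for ONE query vector over a list of key/value vectors (hypothetical helper)."""
    d_k = len(query)  # dimension of the query/key vectors
    # Score each key: dot product with the query, scaled by sqrt(d_k)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    # Softmax turns scores into positive weights that sum to 1
    # (subtracting the max first avoids overflow in exp and does not change the result)
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Output: weighted sum of the value vectors, component by component
    d_v = len(values[0])
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(d_v)]
    return weights, output


# Hypothetical toy data: one 2-dimensional query, three keys and three values
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

weights, output = scaled_dot_product_attention(query, keys, values)
print(weights)
print(output)
```

With these toy values, the first and third keys have the same dot product with the query, so they receive equal (and larger) weights, and the output is pulled toward their values.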

2. Matrix Calculation of Self-Attention:

In practice, we don’t calculate attention for a single word, but rather for a set of words (i.e., a sequence). To do this efficiently, we use matrix operations.

Step 1: Calculate Query, Key, Value matrices

Given an input matrix $X$ (whose rows are the embeddings of all words in a sequence) and the trained weight matrices $W^Q$, $W^K$, and $W^V$:

$$Q = XW^Q, \quad K = XW^K, \quad V = XW^V$$
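Step 1 is just three matrix products. The sketch below assumes a hypothetical toy sequence of 3 words with 4-dimensional embeddings and made-up weight matrices that project to 2 dimensions; in a real model the weights come from training, and a library such as NumPy would replace the hand-written `matmul` helper.

```python
def matmul(a, b):
    """Multiply an (n x m) matrix by an (m x p) matrix, both stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


# Hypothetical input X: 3 words, one 4-dimensional embedding per row
X = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 2.0, 0.0, 2.0],
     [1.0, 1.0, 1.0, 1.0]]

# Hypothetical "trained" weights, each of shape (d_model = 4, d_k = d_v = 2)
W_Q = [[1, 0], [0, 1], [1, 0], [0, 1]]
W_K = [[0, 1], [1, 0], [0, 1], [1, 0]]
W_V = [[1, 1], [0, 1], [1, 0], [0, 0]]

Q = matmul(X, W_Q)  # one query row per word
K = matmul(X, W_K)  # one key row per word
V = matmul(X, W_V)  # one value row per word

print(Q)  # [[2.0, 0.0], [0.0, 4.0], [2.0, 2.0]]
print(K)  # [[0.0, 2.0], [4.0, 0.0], [2.0, 2.0]]
print(V)  # [[2.0, 1.0], [0.0, 2.0], [2.0, 2.0]]
```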

Steps 2 to 5: Compute the Output of the Self-Attention Layer

Given the matrices $Q$, $K$, and $V$ that we've just computed:

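- Calculate the dot product of $Q$ and $K^T$ to get the score matrix:

$$S = QK^T$$

- Divide the score matrix by the square root of the key dimension (depth) $d_k$:

$$S_{\text{scaled}} = \frac{QK^T}{\sqrt{d_k}}$$

- Apply the softmax function, row by row, to the scaled score matrix:

$$A = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)$$

- Multiply the attention weights by the value matrix $V$:

$$Z = AV = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right) V$$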

This output is the result of the self-attention mechanism for the input sequence.

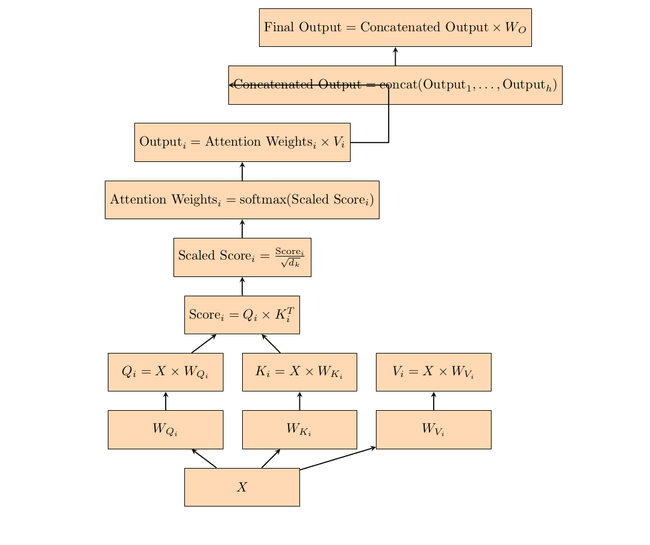
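Steps 2 to 5 can be sketched in plain Python as follows. The helper names (`matmul`, `transpose`, `softmax_rows`, `self_attention`) and the toy $Q$, $K$, $V$ values are hypothetical, chosen only to keep the example small and runnable.

```python
import math


def matmul(a, b):
    """Multiply an (n x m) matrix by an (m x p) matrix, both stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    """Apply softmax to each row independently (max subtracted for numerical stability)."""
    result = []
    for row in m:
        top = max(row)
        exps = [math.exp(x - top) for x in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result


def self_attention(Q, K, V):
    d_k = len(K[0])                                                  # dimension of the key vectors
    scores = matmul(Q, transpose(K))                                 # Step 2: Q K^T, shape (n, n)
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]   # Step 3: divide by sqrt(d_k)
    weights = softmax_rows(scaled)                                   # Step 4: each row sums to 1
    return matmul(weights, V), weights                               # Step 5: weighted sum of values


# Hypothetical toy matrices: 3 words, d_k = d_v = 2
Q = [[2.0, 0.0], [0.0, 4.0], [2.0, 2.0]]
K = [[0.0, 2.0], [4.0, 0.0], [2.0, 2.0]]
V = [[2.0, 1.0], [0.0, 2.0], [2.0, 2.0]]

Z, A = self_attention(Q, K, V)
for row in A:
    print([round(w, 3) for w in row])
```

Each row of `A` is one word's attention distribution over every word in the sequence, and each row of `Z` is that word's new representation.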

3. Multi-Head Attention:

In multi-head attention, the idea is to have multiple sets of Query, Key, and Value weight matrices. Each set produces its own attention scores and outputs, which lets the model attend to information from different representation subspaces of the data.

Let's denote the number of heads as $h$.

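- For each head $i$, we have its own weight matrices: $W_i^Q$, $W_i^K$, and $W_i^V$.

- For each head $i = 1, \dots, h$, compute the Query, Key, and Value matrices just as in single-head attention:

$$Q_i = XW_i^Q, \quad K_i = XW_i^K, \quad V_i = XW_i^V$$

- Using the $Q_i$, $K_i$, and $V_i$ matrices, we calculate the output for each head:

$$\text{head}_i = \text{softmax}\left(\frac{Q_i K_i^T}{\sqrt{d_k}}\right) V_i$$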
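The per-head computation can be sketched the same way. This assumes $d_k = d_v = d_{model} / h$ (a common convention; the text above does not fix the sizes) and uses randomly initialised stand-ins for the trained per-head weight matrices. All names and sizes here are hypothetical.

```python
import math
import random


def matmul(a, b):
    """Multiply an (n x m) matrix by an (m x p) matrix, both stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    result = []
    for row in m:
        top = max(row)
        exps = [math.exp(x - top) for x in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    weights = softmax_rows([[s / math.sqrt(d_k) for s in row] for row in scores])
    return matmul(weights, V)


def random_matrix(rows, cols, rng):
    """Hypothetical stand-in for a trained weight matrix."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


n, d_model, h = 3, 4, 2      # toy sizes: 3 words, 4-dim embeddings, 2 heads
d_k = d_v = d_model // h     # assumed convention: split d_model evenly across heads
rng = random.Random(0)       # fixed seed so the sketch is reproducible
X = random_matrix(n, d_model, rng)

# Each head i has its own W_i^Q, W_i^K, W_i^V
head_weights = [(random_matrix(d_model, d_k, rng),
                 random_matrix(d_model, d_k, rng),
                 random_matrix(d_model, d_v, rng)) for _ in range(h)]

heads = []
for W_Q_i, W_K_i, W_V_i in head_weights:
    Q_i, K_i, V_i = matmul(X, W_Q_i), matmul(X, W_K_i), matmul(X, W_V_i)
    heads.append(attention(Q_i, K_i, V_i))   # head_i has shape (n, d_v)

print(len(heads), len(heads[0]), len(heads[0][0]))
```

Because every head has different projection matrices, the heads produce different outputs from the same input `X`.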

Now, after obtaining the output for each head, we need to combine these outputs to get a single unified output.

- Concatenation & Linear Transformation: The outputs from all heads are concatenated and then linearly transformed to produce the final output:

$$\text{MultiHead}(X) = \text{Concat}(\text{head}_1, \dots, \text{head}_h)\, W^O$$

where $W^O$ is another trained weight matrix.
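Concatenation and the final projection are again plain list and matrix operations. The head outputs and the $W^O$ values below are made-up toy numbers (hypothetical), used only to show how the shapes line up: $h = 2$ heads with $d_v = 2$ give rows of length $h \cdot d_v = 4$, and this $W^O$ maps them to 4 output features.

```python
def matmul(a, b):
    """Multiply an (n x m) matrix by an (m x p) matrix, both stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def concat_heads(heads):
    """Place head outputs side by side: row t of the result joins row t of every head."""
    n = len(heads[0])
    return [[x for head in heads for x in head[t]] for t in range(n)]


# Hypothetical outputs of h = 2 heads for a 3-word sequence, each of shape (3, d_v = 2)
head_1 = [[1, 0], [0, 1], [1, 1]]
head_2 = [[2, 2], [0, 0], [1, -1]]

# Hypothetical W^O of shape (h * d_v, d_model) = (4, 4)
W_O = [[1, 0, 0, 1],
       [0, 1, 0, 0],
       [0, 0, 1, 0],
       [1, 0, 0, 1]]

concatenated = concat_heads([head_1, head_2])  # shape (3, 4)
output = matmul(concatenated, W_O)             # shape (3, 4)
print(concatenated)  # [[1, 0, 2, 2], [0, 1, 0, 0], [1, 1, 1, -1]]
print(output)        # [[3, 0, 2, 3], [0, 1, 0, 0], [0, 1, 1, 0]]
```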

This multi-head mechanism allows the Transformer to focus on different positions with different subspace representations, making it more expressive and capable of capturing various types of relationships in the data.
